This is the final week before project submission. Last week's class work was a render of the 3D model we were working on. In the morning, we went through our submitted renders with our class tutor.

The first render I submitted has a number of issues that need addressing. Technical faults aside, the composition suffers because the monorail tracks were not laid out with aesthetics in mind. While it would be logical to place a station above the current DLR stop for interchange, this would be difficult to create, as the lighting in the scene would change drastically. For this reason, I have decided to change the direction of the tracks, which now form a cross intersection a few metres away from the lift shafts.

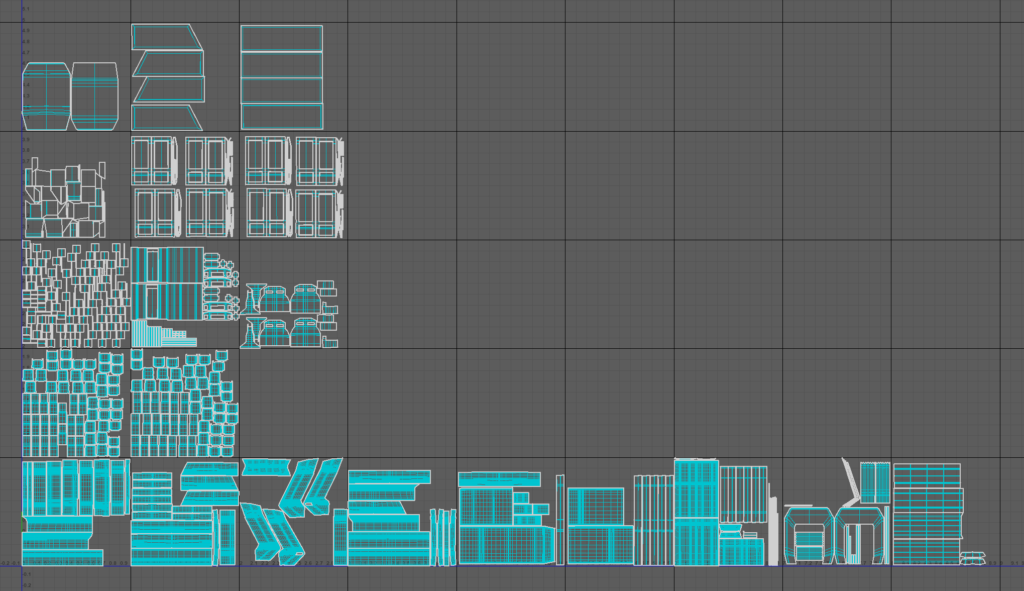

Train UV Unwrapping

In the afternoon, I unwrapped the UVs for the train model. The model was fairly complex, and the UVs took around five hours to create. I used multiple UDIM tiles and intend to texture at 2K.
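Because the train's UVs span several UDIM tiles, here is a minimal sketch of the standard UDIM numbering convention: tile 1001 sits at the UV origin, the number increases by 1 per tile in U (ten columns per row) and by 10 per row in V. The function names are hypothetical and only illustrate the mapping; Maya and Substance Painter handle it internally.

```python
import math


def udim_tile(u: float, v: float) -> int:
    """Return the UDIM tile number that contains the UV coordinate (u, v)."""
    col = math.floor(u)  # which tile column in U (0-9)
    row = math.floor(v)  # which tile row in V (0 and up)
    if not 0 <= col <= 9 or row < 0:
        raise ValueError(f"UV ({u}, {v}) lies outside the UDIM range")
    return 1001 + col + 10 * row


def tile_origin(tile: int) -> tuple[int, int]:
    """Inverse mapping: the lower-left UV corner of a UDIM tile."""
    index = tile - 1001
    return index % 10, index // 10


# A shell centred at (1.2, 0.3) lands in the second tile of the first row.
print(udim_tile(1.2, 0.3))  # 1002
print(tile_origin(1012))    # (1, 1)
```

Keeping each material on its own tile (as in the pipeline notes) means each tile number maps to one texture set per material.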

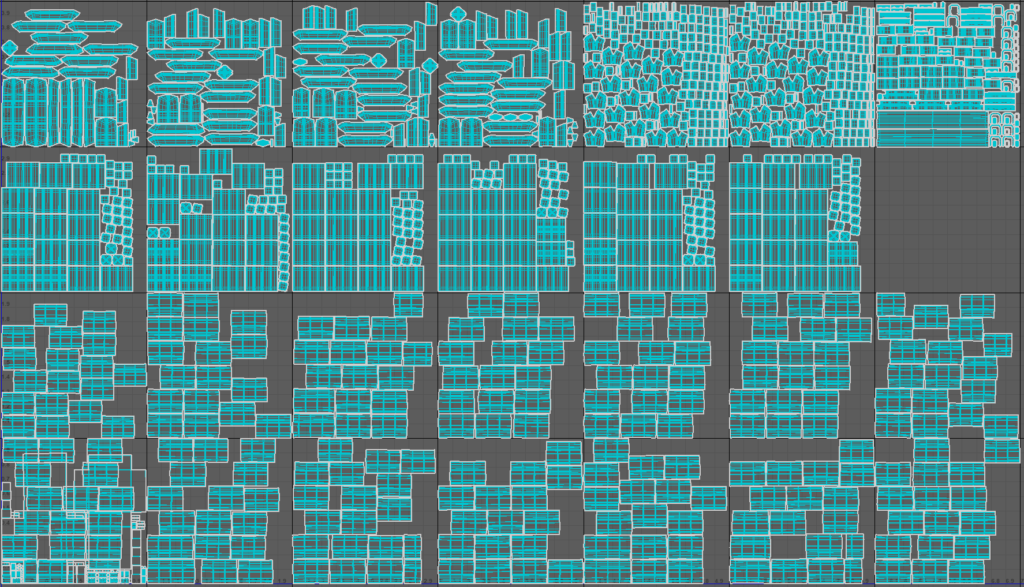

Track UV Unwrapping

Unwrapping the track was also a lengthy process, as the UV shells were very heavy to work with. In the end, however, I was satisfied with the result.

Project Pipeline

- Timeline exported from Nuke as a .png image sequence for use in 3D Equalizer

- Sequence exported in ACES for better colour and dynamic range, which also simplifies cross-application workflows (all software uses the same colour space, eliminating material differences between Substance Painter and Arnold, etc.)

- 3D Equalizer track and solve with exactly surveyed points, using measurements from Google Earth and Wikipedia (track gauge, platform length, elevator shaft width); the same measurements were also used in Maya to scale the scene

- 3DE to Maya (.mel export) of the point cloud and solved camera with dewarped (undistorted) footage, using a frame offset to align the sequence and the image scale in Maya to compensate for the extra pixels added by overscan; lower-bitrate .png files were also used so that Maya plays back more smoothly

- Creating proxy geo in Maya for better scene orientation and later use (masks, roto)

- Modelling and texturing in separate Maya projects for better scene and asset management, then referencing these scenes into the main Maya project

- Using motion paths in Maya to lay out the track

- UV creation using UDIM tiles and a consistent texel density so that all objects are correctly scaled relative to the scene, optimizing everything for later smoothing (adding edge loops where needed, etc.); UVs distributed across UDIM tiles according to material

- Creating UVs before duplicating objects (pillars, track, train, etc.) and laying the copies out in the scene

- Catclark (Catmull-Clark) subdivision applied at render time through Arnold's subdivision parameters, keeping the viewport comfortable to work in

- Maya to Substance Painter: .fbx export of either the smoothed or the original mesh, depending on the shape and LOD needed; materials created with the PBR Metallic Roughness workflow

- Baking texture maps for each model, minding the relationship between resolution and LOD, and using custom bake settings (learned in class) to increase material quality

- Creating materials based on real-world references

- Adding textures and individual details from real-world references on top of materials and generators

- Anchor points for surface effects such as wear and tear

- Exporting textures from Substance Painter in Targa (.tga) format

- Applying these textures as UDIMs to aiStandardSurface material in Maya

- Lighting using Arnold lights and HDRIs

- Lookdev: setting up Maya layers, AO and lighting setups, and preparing the scene for rendering

- Setting up render AOVs: a multichannel EXR with render and utility passes

  - Diffuse: direct, indirect, albedo

  - Specular: direct, indirect

  - Transmission pass

  - Coat

  - Alpha pass for the geometry

  - Depth (Z) pass

  - N (normal) pass

  - P (position) pass

  - ID pass: track and trains separately (render layers)

  - Motion vectors (render layer)

  - Shadow mask

  - Shadow diffuse

  - Ambient occlusion (render layer)

- Rendering the image sequence in the ACES colour space for easier integration in Nuke and richer colour and luminance information

- Exporting the 3DE lens distortion node to Nuke

- Slap comp in Nuke for the final submission, leaving advanced compositing for the next module.
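The pipeline uses real-world measurements (track gauge, platform length, elevator shaft width) to scale the scene. A camera solve on its own is only defined up to an unknown scale, so one known distance between two solved points fixes it. The sketch below shows that arithmetic under stated assumptions: the helper names are hypothetical, coordinates are plain tuples, and several measurements are simply averaged. In practice 3D Equalizer applies survey data inside the solve itself; this only illustrates the idea.

```python
import math

Point = tuple[float, float, float]


def scale_factor(p1: Point, p2: Point, real_distance: float) -> float:
    """Uniform scale that maps the solved distance p1->p2 onto real_distance."""
    solved = math.dist(p1, p2)
    if solved == 0:
        raise ValueError("reference points must be distinct")
    return real_distance / solved


def averaged_scale(measurements: list[tuple[Point, Point, float]]) -> float:
    """Average the scale implied by several reference measurements."""
    factors = [scale_factor(a, b, d) for a, b, d in measurements]
    return sum(factors) / len(factors)


def apply_scale(points: list[Point], factor: float) -> list[Point]:
    """Scale a point cloud uniformly about the origin."""
    return [tuple(c * factor for c in p) for p in points]


# Hypothetical example: two solved points 0.5 units apart that should be
# 143.5 cm apart in Maya (whose default unit is centimetres).
print(scale_factor((0.0, 0.0, 0.0), (0.5, 0.0, 0.0), 143.5))  # 287.0
```

Averaging several references (rather than trusting one) reduces the effect of any single imprecise measurement taken from Google Earth.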
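The 3DE-to-Maya step compensates for overscan and offsets the image sequence. When footage is undistorted with overscan, the plate gains extra pixels around the edges while the camera's filmback still matches the original frame, so the image plane has to be enlarged by the ratio of the two resolutions. The sketch below assumes square pixels and centred overscan; the `Plate` type, the helper names, the example resolutions and the offset convention (image number = scene frame + offset) are all hypothetical, for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plate:
    width: int   # pixels
    height: int  # pixels


def overscan_scale(original: Plate, undistorted: Plate) -> tuple[float, float]:
    """Image-plane scale (x, y) so the overscanned plate lines up with a
    camera whose filmback matches the original plate."""
    return undistorted.width / original.width, undistorted.height / original.height


def frame_offset(scene_start: int, first_image: int) -> int:
    """Offset such that image_number = scene_frame + offset (assumed convention)."""
    return first_image - scene_start


# Hypothetical HD plate undistorted with 5% overscan, scene starting at 1001.
print(overscan_scale(Plate(1920, 1080), Plate(2016, 1134)))  # (1.05, 1.05)
print(frame_offset(scene_start=1001, first_image=1))         # -1000
```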
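The UV step relies on a consistent texel density so that every object is textured at the same pixels-per-unit relative to the scene. A common way to measure it is texture resolution times the square root of (UV area / world area). The sketch below assumes square texture maps, UV area measured in 0-1 space (one UDIM tile = 1.0) and world area in Maya's default centimetres; the function names and example numbers are hypothetical, and the 2048 px figure only mirrors the planned 2K textures.

```python
import math


def texel_density(uv_area: float, world_area: float, texture_res: int) -> float:
    """Pixels per scene unit for a UV shell.

    uv_area: shell area in 0-1 UV space (one UDIM tile = 1.0)
    world_area: area of the same faces in scene units squared
    texture_res: texture width in pixels (square map assumed)
    """
    if uv_area <= 0 or world_area <= 0:
        raise ValueError("areas must be positive")
    return texture_res * math.sqrt(uv_area / world_area)


def uv_scale_for_target(current: float, target: float) -> float:
    """Uniform UV scale factor that moves a shell from current to target density."""
    return target / current


# A shell filling a quarter of a 2K tile, covering 1 m^2 (10,000 cm^2):
density = texel_density(uv_area=0.25, world_area=10_000.0, texture_res=2048)
print(round(density, 2))  # 10.24 px per cm
```

Because density scales linearly with UV size, doubling a shell's density means scaling its UVs by 2 (which quadruples its UV area), which is why shells can quickly outgrow a single UDIM tile.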